Chapter 5 Let’s boost the models

Beside the XGBoost model also a random forest model will be fitted here for comparison. The whole modeling approach and procedure is closely following the principles that are outlined in the great book Tidy modeling with R by Max Kuhn and Julia Silge.

For the modeling three additional packages are required.[5, 17, 18]

library(xgboost) # for the xgboost model

library(ranger) # for the random forest model

# + the vip package but it will not be loaded into

# the namespaceFor parallel computations the doParallel package is used.[19]

This is most useful for the tuning part.

library(doParallel)

# Create a cluster object and then register:

cl <- makePSOCKcluster(2)

registerDoParallel(cl)First one has to set a metric for the evaluation of the final performance. As one knows from the EDA that not a lot of outliers are present in the data one can leave the default for XGBoost as the optimization metric for regression which is the root mean squared error (RMSE) which basically corresponds to the \(L_2\) loss. Besides also the mean average error (MAE) which is kind of the \(L_1\) norm will be covered but the optimization and tuning will focus on the RMSE.

5.1 Burnout data

5.1.1 Baseline models

The first thing is to set up the trivial baseline models.

# the trivial intercept only model:

bout_predict_trivial_mean <- function(new_data) {

rep(mean(burnout_train$burn_rate), nrow(new_data))

}

# the trivial scoring of mental fatigue score (if missing intercept model)

bout_predict_trivial_mfs <- function(new_data) {

# these two scoring parameters are correspondint to the

# simple linear regression model containing only one predictor

# i.e. mfs

pred <- new_data[["mental_fatigue_score"]] * 0.097 - 0.1

pred[is.na(pred)] <- mean(burnout_train$burn_rate)

pred

}The predictions of these baseline models on the test data set will be compared with the predictions of the tree-based models that will be constructed.

5.1.2 Model specification

# Models:

# Random forest model for comparison

bout_rf_model <- rand_forest(trees = tune(),

mtry = tune()) %>%

set_engine("ranger") %>%

set_mode("regression")

# XGBoost model

bout_boost_model <- boost_tree(trees = tune(),

learn_rate = tune(),

loss_reduction = tune(),

tree_depth = tune(),

mtry = tune(),

sample_size = tune(),

stop_iter = 10) %>%

set_engine("xgboost") %>%

set_mode("regression")# Workflows: model + recipe

# Random Forest workflow

bout_rf_wflow <-

workflow() %>%

add_model(bout_rf_model) %>%

add_recipe(burnout_rec_rf)

# XGBoost workflow with the untransformed target

bout_boost_wflow <-

workflow() %>%

add_model(bout_boost_model) %>%

add_recipe(burnout_rec_boost)

# XGBoost workflow with the transformed target (empirical logit)

bout_boost_wflow_trans <-

workflow() %>%

add_model(bout_boost_model) %>%

add_recipe(burnout_rec_boost_trans)5.1.3 Tuning

For the hyperparameter tuning one needs validation sets to monitor the models on unseen data. To do this 5-fold cross validation (CV) is used here.

# Create Resampling object

set.seed(2)

burnout_folds <- vfold_cv(burnout_train, v = 5)Now adjust or check the hyperparameter ranges that the tuning will use. First the Random forest model.

# Have a look at the hyperparameters that have to be tuned and finalize them

bout_rf_params <- bout_rf_wflow %>%

parameters()

bout_rf_params ## Collection of 2 parameters for tuning

##

## identifier type object

## mtry mtry nparam[?]

## trees trees nparam[+]

##

## Model parameters needing finalization:

## # Randomly Selected Predictors ('mtry')

##

## See `?dials::finalize` or `?dials::update.parameters` for more information.This shows that the mtry hyperparameter has to be adjusted depending on the data. Moreover with the dials::update function one can manually set the ranges that should be used for tuning.

# default range for tuning is 1 up to 2000 for the trees argument

# set the lower bound of the range to 100. Then finalize the

# parameters using the training data.

bout_rf_params <- bout_rf_params %>%

update(trees = trees(c(100, 2000))) %>%

finalize(burnout_train)

bout_rf_params## Collection of 2 parameters for tuning

##

## identifier type object

## mtry mtry nparam[+]

## trees trees nparam[+]bout_rf_params %>% pull_dials_object("mtry")## # Randomly Selected Predictors (quantitative)

## Range: [1, 10]bout_rf_params %>% pull_dials_object("trees")## # Trees (quantitative)

## Range: [100, 2000]Now this parameter object is ready for tuning and the same steps have to be performed on the main boosting workflow. The parameter set for the untransformed target can then also be used for the transformed one.

bout_boost_params <- bout_boost_wflow %>%

parameters()

bout_boost_params ## Collection of 6 parameters for tuning

##

## identifier type object

## mtry mtry nparam[?]

## trees trees nparam[+]

## tree_depth tree_depth nparam[+]

## learn_rate learn_rate nparam[+]

## loss_reduction loss_reduction nparam[+]

## sample_size sample_size nparam[+]

##

## Model parameters needing finalization:

## # Randomly Selected Predictors ('mtry')

##

## See `?dials::finalize` or `?dials::update.parameters` for more information.# first a look at the default ranges

trees()## # Trees (quantitative)

## Range: [1, 2000]tree_depth()## Tree Depth (quantitative)

## Range: [1, 15]learn_rate()## Learning Rate (quantitative)

## Transformer: log-10

## Range (transformed scale): [-10, -1]loss_reduction()## Minimum Loss Reduction (quantitative)

## Transformer: log-10

## Range (transformed scale): [-10, 1.5]sample_size()## # Observations Sampled (quantitative)

## Range: [?, ?]So sample_size must also be finalized. Again the lower bound on the number of trees will be raised to 100. The other scales are really sensible and will be left as is.

bout_boost_params <- bout_boost_params %>%

update(trees = trees(c(100, 2000))) %>%

finalize(burnout_train)

bout_boost_params## Collection of 6 parameters for tuning

##

## identifier type object

## mtry mtry nparam[+]

## trees trees nparam[+]

## tree_depth tree_depth nparam[+]

## learn_rate learn_rate nparam[+]

## loss_reduction loss_reduction nparam[+]

## sample_size sample_size nparam[+]bout_boost_params %>%

pull_dials_object("sample_size")## Proportion Observations Sampled (quantitative)

## Range: [0.1, 1]bout_boost_params %>%

pull_dials_object("mtry")## # Randomly Selected Predictors (quantitative)

## Range: [1, 10]# use the same object for the tuning of the boosted model

# with the transformed target

bout_boost_params_trans <- bout_boost_paramsNow also these parameter objects are ready to be used. The next step is to define the metrics used for evaluation while tuning.

# define a metrics set used for evaluation of the hyperparameters

regr_metrics <- metric_set(rmse, mae)Now the actual tuning begins. The models will be tuned by a space filling grid search. As one has defined ranges for each parameter one can then use different algorithms to construct a grid of combinations of parameter values that tries to best fill the defined ranges by random sampling also in a high dimensional setting. In the following the function tune::grid_latin_hypercube is used for this. So basically one tries out all hyperparameter values for each fold and saves the performance of the performance metrics on the hold out fold. These metrics are then aggregated for each combination and with the resulting estimates of performance one can choose the final set of hyperparameters. The more hyperparameters the model has the more tuning rounds might be needed to refine the grid. Besides the classical grid search there are also iterative methods for tuning. These are mainly good to tune a single hyperparameter at once and not for a bunch of them simultaneously. They will not be used in the following.

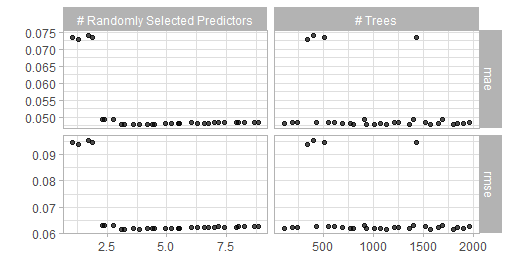

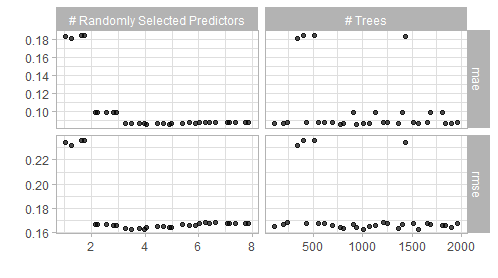

The random forest model goes first with the tuning.

Here there are as seen above only two hyperparameters to be tuned thus 30 combinations should suffice.

# took roughly 30 minutes

system.time({

set.seed(2)

bout_rf_tune <- bout_rf_wflow %>%

tune_grid(

resamples = burnout_folds,

grid = bout_rf_params %>%

grid_latin_hypercube(size = 30, original = FALSE),

metrics = regr_metrics

)

})

# visualization of the tuning results (snapshot of the output below)

autoplot(bout_rf_tune) + theme_light()

# this functions shows the best combinations wrt the rmse metric of all the

# combinations in the grid

show_best(bout_rf_tune, metric = "rmse")

Figure 5.1: Result of a spacefilling grid search for the random forest model.

The visualization alongside the best performing results suggest that a value of mtryof 3 and 1000 trees should give good results. Thus one can finalize and fit this model.

final_bout_rf_wflow <-

bout_rf_wflow %>%

finalize_workflow(tibble(

trees = 1000,

mtry = 3

)) %>%

fit(burnout_train)Now the main boosting model.

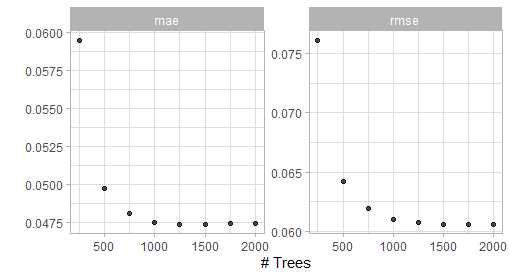

First tune only the number of trees in order to detect a number of trees that is ‘large enough.’ Then tune the tree specific arguments. If one tunes all parameters at the same time the grid grows to large. Moreover a tuning of the trees argument could encourage overfitting. See the book Hands-on Machine Learning with R for a detailed explenation of the tuning strategies.[2]

The first tuning round:

To display the discussed impact of different maximum tree depths the first grid search for a good number of trees will besides the kind of standard value 6 also be performed for regression stumps i.e. a value of 1 for the maximum tree depth.

# tuning grid just for the #trees one with max tree depth 6 and one with

# only stumps for comparison

first_grid_boost_burn_depth6 <- crossing(

trees = seq(250, 2000, 250),

mtry = 9,

tree_depth = 6,

loss_reduction = 0.000001,

learn_rate = 0.01,

sample_size = 1

)

first_grid_boost_burn_stumps <- crossing(

trees = seq(250, 2000, 250),

mtry = 9,

tree_depth = 1,

loss_reduction = 0.000001,

learn_rate = 0.01,

sample_size = 1

)# took roughly 1 minute

system.time({

set.seed(2)

bout_boost_tune_first_stumps <- bout_boost_wflow %>%

tune_grid(

resamples = burnout_folds,

grid = first_grid_boost_burn_stumps,

metrics = regr_metrics

)

})

autoplot(bout_boost_tune_first_stumps) + theme_light()

show_best(bout_boost_tune_first_stumps)# took roughly 1 minute

system.time({

set.seed(2)

bout_boost_tune_first_depth6 <- bout_boost_wflow %>%

tune_grid(

resamples = burnout_folds,

grid = first_grid_boost_burn_depth6,

metrics = regr_metrics

)

})

# plot output is shown in the figure below

autoplot(bout_boost_tune_first_depth6) + theme_light()

show_best(bout_boost_tune_first_depth6)

Figure 5.2: Result of the first grid search for the XGBoost model (max depth 6).

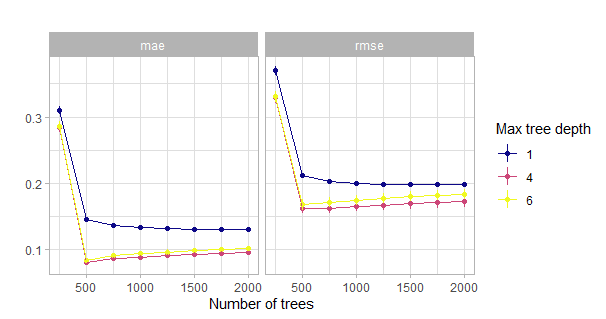

Compare the two different first tuning rounds:

# output of this code shown in the figure below

bout_boost_tune_first_stumps %>%

collect_metrics() %>%

mutate(tree_depth = 1) %>%

bind_rows(

bout_boost_tune_first_depth6 %>%

collect_metrics() %>%

mutate(tree_depth = 6)

) %>%

ggplot(aes(x = trees, y = mean, col = factor(tree_depth))) +

geom_point() +

geom_line() +

geom_linerange(aes(ymin = mean - std_err, ymax = mean + std_err)) +

labs(col = "Max tree depth", y = "", x = "Number of trees",

title = "") +

scale_color_viridis_d(option = "C") +

facet_wrap(~ .metric) +

theme_light()

Figure 5.3: Comparison of the initial grid search for the number of trees w.r.t. the maximum tree depth. Actually with tiny confidence bands around the estimates.

This shows that the model with just stumps converges much slower or may even never reach the low level of when using deeper trees. One reason for this could be that these stumps do not account for interactions and of course the same number of deep trees encode much more information than the same number of stumps.

So 1500 trees should suffice here. Thus the workflow and model will be adjusted accordingly.

# fix the number of trees by redefining the boosted model with a

# fixed number of trees.

bout_boost_model <- boost_tree(trees = 1500,

learn_rate = tune(),

loss_reduction = tune(),

tree_depth = tune(),

mtry = tune(),

sample_size = tune(),

stop_iter = 10) %>%

set_engine("xgboost") %>%

set_mode("regression")

# update the workflow

bout_boost_wflow <-

bout_boost_wflow %>%

update_model(bout_boost_model)

bout_boost_params <- bout_boost_wflow %>%

parameters() %>%

finalize(burnout_train)

# reduced hyperparameter space

bout_boost_params## Collection of 5 parameters for tuning

##

## identifier type object

## mtry mtry nparam[+]

## tree_depth tree_depth nparam[+]

## learn_rate learn_rate nparam[+]

## loss_reduction loss_reduction nparam[+]

## sample_size sample_size nparam[+]Now perform the first major grid search over all the other hyperparameters.

# now tune all the remaining hyperparameters with a large space filling grid

# took roughly 1.5 hours

system.time({

set.seed(2)

bout_boost_tune_second <- bout_boost_wflow %>%

tune_grid(

resamples = burnout_folds,

grid = bout_boost_params %>%

grid_latin_hypercube(

size = 200),

metrics = regr_metrics

)

})

show_best(bout_boost_tune_second, metric = "rmse")Now refine the grid i.e. the parameter space according to the results of the last grid search and perform a third one.

# now tune all the remaining hyperparameters with a refined space filling grid

# took roughly 2 hours

system.time({

set.seed(2)

bout_boost_tune_third <- bout_boost_wflow %>%

tune_grid(

resamples = burnout_folds,

grid = bout_boost_params %>%

update(

mtry = mtry(c(5,9)),

tree_depth = tree_depth(c(4,5)),

learn_rate = learn_rate(c(-1.7, -1.3)),

loss_reduction = loss_reduction(c(-8,-3)),

sample_size = sample_prop(c(0.4, 0.9))

) %>%

grid_latin_hypercube(

size = 200),

metrics = regr_metrics

)

})

show_best(bout_boost_tune_third, metric = "rmse")With this final grid search one is ready to finalize the model.

The results of the three grid searches suggest that no column-subsampling should be applied, the maximum tree depth should be 4, the learning rate should be small but not extremely small (\(\sim 0.02\)), the loss reduction regularization effect is not needed here (very small) and the sample size for each tree should be set to 0.8.

final_bout_boost_wflow <-

bout_boost_wflow %>%

finalize_workflow(tibble(

mtry = 9,

tree_depth = 4,

learn_rate = 0.02,

loss_reduction = 0.0000003,

sample_size = 0.8

)) %>%

fit(burnout_train)Now tune the transformed outcome boosting model.

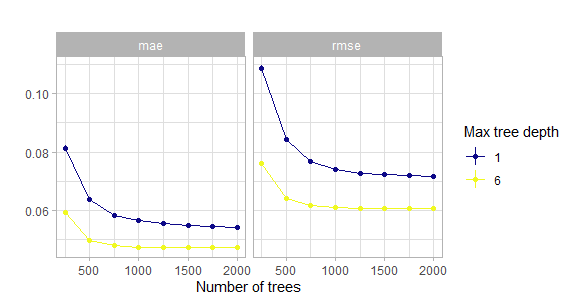

Again start with the trees.

first_grid_boost_burn_trans <- crossing(

trees = seq(250, 2000, 250),

mtry = 9,

tree_depth = 6,

loss_reduction = 0.000001,

learn_rate = 0.01,

sample_size = 1

)# took roughly 1 minute

system.time({

set.seed(2)

bout_boost_tune_first_trans <- bout_boost_wflow_trans %>%

tune_grid(

resamples = burnout_folds,

grid = first_grid_boost_burn_trans,

metrics = regr_metrics

)

})

# plot output in the figure below

autoplot(bout_boost_tune_first_trans) + theme_light()

show_best(bout_boost_tune_first_trans)

Figure 5.4: Result of the first grid search for the XGBoost model with transformed outcome.

Again 1500 trees should easily suffice.

# fix the number of trees by redefining the boosted model with a

# fixed number of trees.

bout_boost_model_trans <- boost_tree(trees = 1500,

learn_rate = tune(),

loss_reduction = tune(),

tree_depth = tune(),

mtry = tune(),

sample_size = tune(),

stop_iter = 10) %>%

set_engine("xgboost") %>%

set_mode("regression")

# update the workflow

bout_boost_wflow_trans <-

bout_boost_wflow_trans %>%

update_model(bout_boost_model_trans)

bout_boost_params_trans <- bout_boost_wflow_trans %>%

parameters() %>%

finalize(burnout_train)

# reduced hyperparameter space

bout_boost_params_trans## Collection of 5 parameters for tuning

##

## identifier type object

## mtry mtry nparam[+]

## tree_depth tree_depth nparam[+]

## learn_rate learn_rate nparam[+]

## loss_reduction loss_reduction nparam[+]

## sample_size sample_size nparam[+]Now perform the first major grid search over all the other hyperparameters and the second one overall.

# now tune all the remaining hyperparameters with a large space filling grid

# took roughly 2 hours

system.time({

set.seed(2)

bout_boost_tune_second_trans <- bout_boost_wflow_trans %>%

tune_grid(

resamples = burnout_folds,

grid = bout_boost_params_trans %>%

grid_latin_hypercube(

size = 200),

metrics = regr_metrics

)

})

show_best(bout_boost_tune_second_trans, metric = "rmse")From the tuning results one can conclude that in this setting actually the same hyperparameter setting as for the raw target variable seems appropriate. So one finalizes the workflow with the same hyperparameters and fits the model.

final_bout_boost_wflow_trans <-

bout_boost_wflow_trans %>%

finalize_workflow(tibble(

mtry = 9,

tree_depth = 4,

learn_rate = 0.02,

loss_reduction = 0.0000003,

sample_size = 0.8

)) %>%

fit(burnout_train)Now all models w.r.t. the burnout data set are fitted.

5.1.4 Evaluate and understand the model

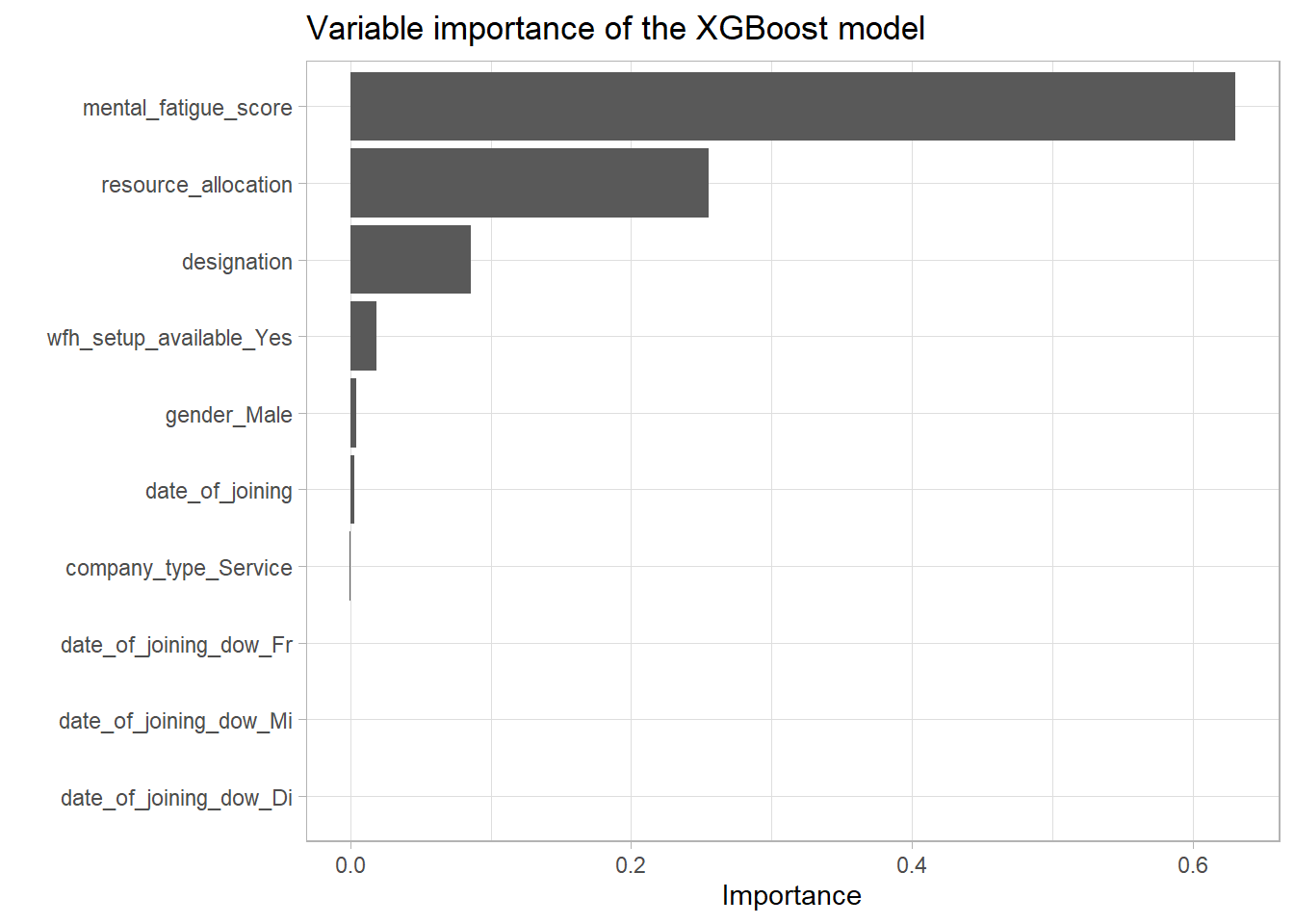

First a visualization of the variable importance. The variable importance is basically calculated by measuring the gain w.r.t. the loss of each feature in the single trees and splits.[1]

For a single tree

The feature importance for the tree \(t\) and the feature \(X_l\) is given by:

\[ \mathcal{I}_l^2(t) = \sum_{j \in \{\text{Internal nodes}\}} \mathcal{i}_j^2\; I(\text{splitting variable}=X_l) \] Where \(i_j^2\) is the estimated improvement in squared error of the split at node \(j\) to stop splitting at this node.

For the full ensemble

\[ \mathcal{I}_l^2 = \frac{1}{K} \sum_{k = 1}^K \mathcal{I}_l^2(t_k) \]

vip_burn <- final_bout_boost_wflow %>%

pull_workflow_fit() %>%

vip::vip() +

theme_light() +

labs(title = "Variable importance of the XGBoost model")

vip_burn

ggsave("_pictures/vip_burn.png",plot = vip_burn)

remove(vip_burn)This shows that indeed the mental_fatigue_score is the most influential predictor by far followed by the ressource_allocation and designation features. These results are not at all surprising as the EDA exactly came to these conclusions. Especially the very few appearances of the features connected to company_type and date_of_joining are most likely just some minor overfitting.

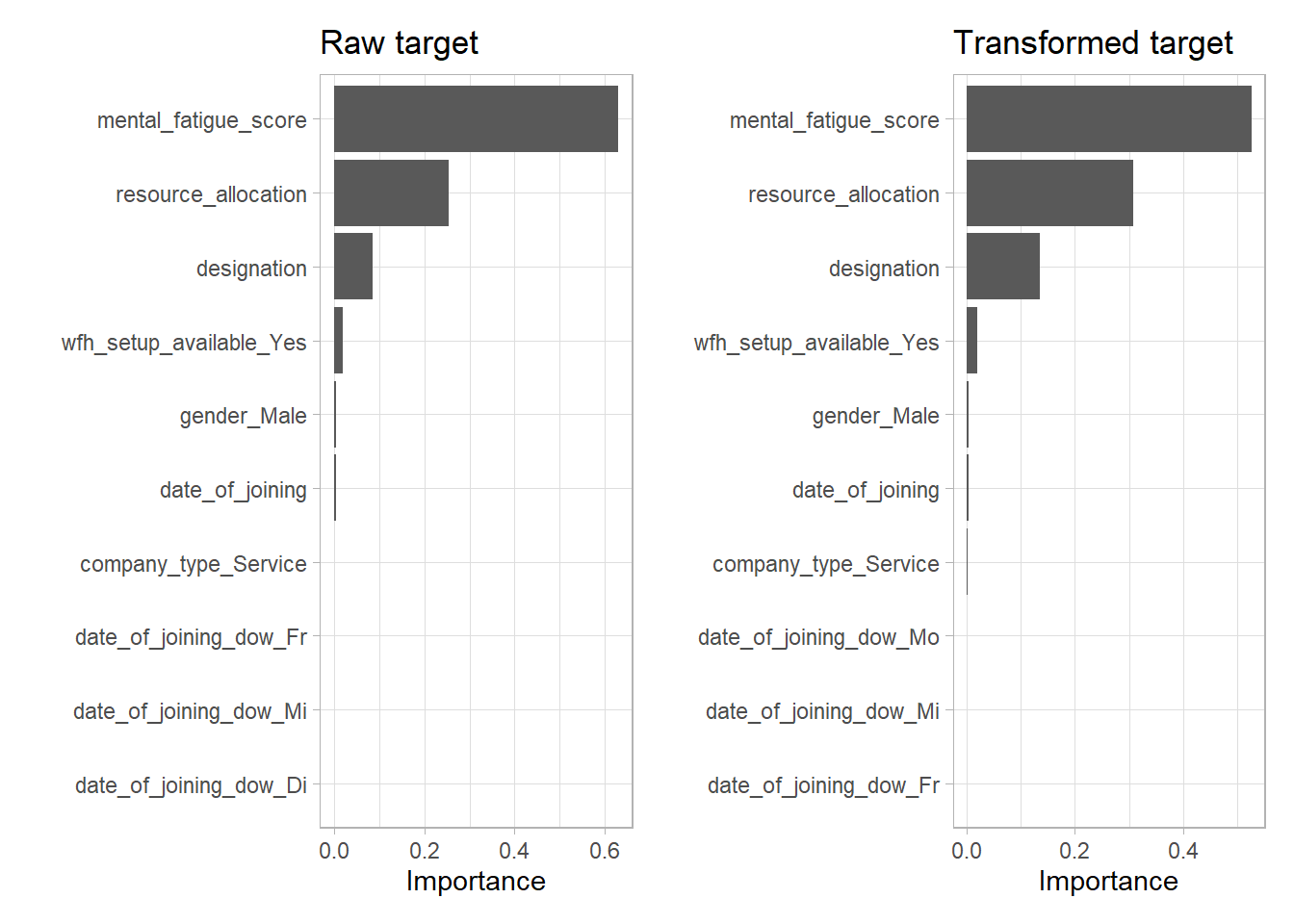

Now compare the variable importance of the model with the raw and the transformed target variable.

One can observe the exact same ordering. The only difference seems to be a higher influence of the designation variable for the transformed target. This is not surprising as the resource allocation and designation variable are highly correlated.

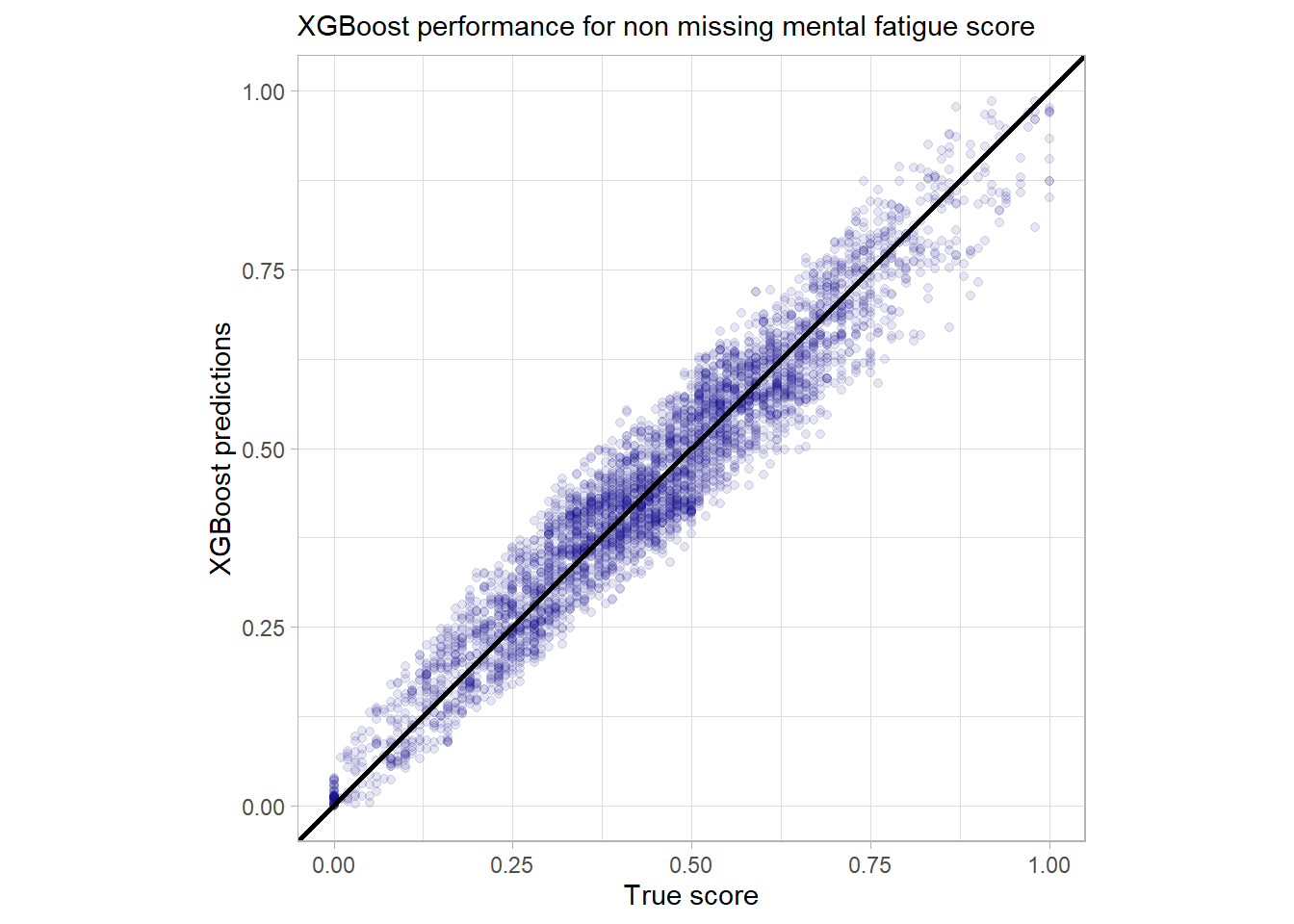

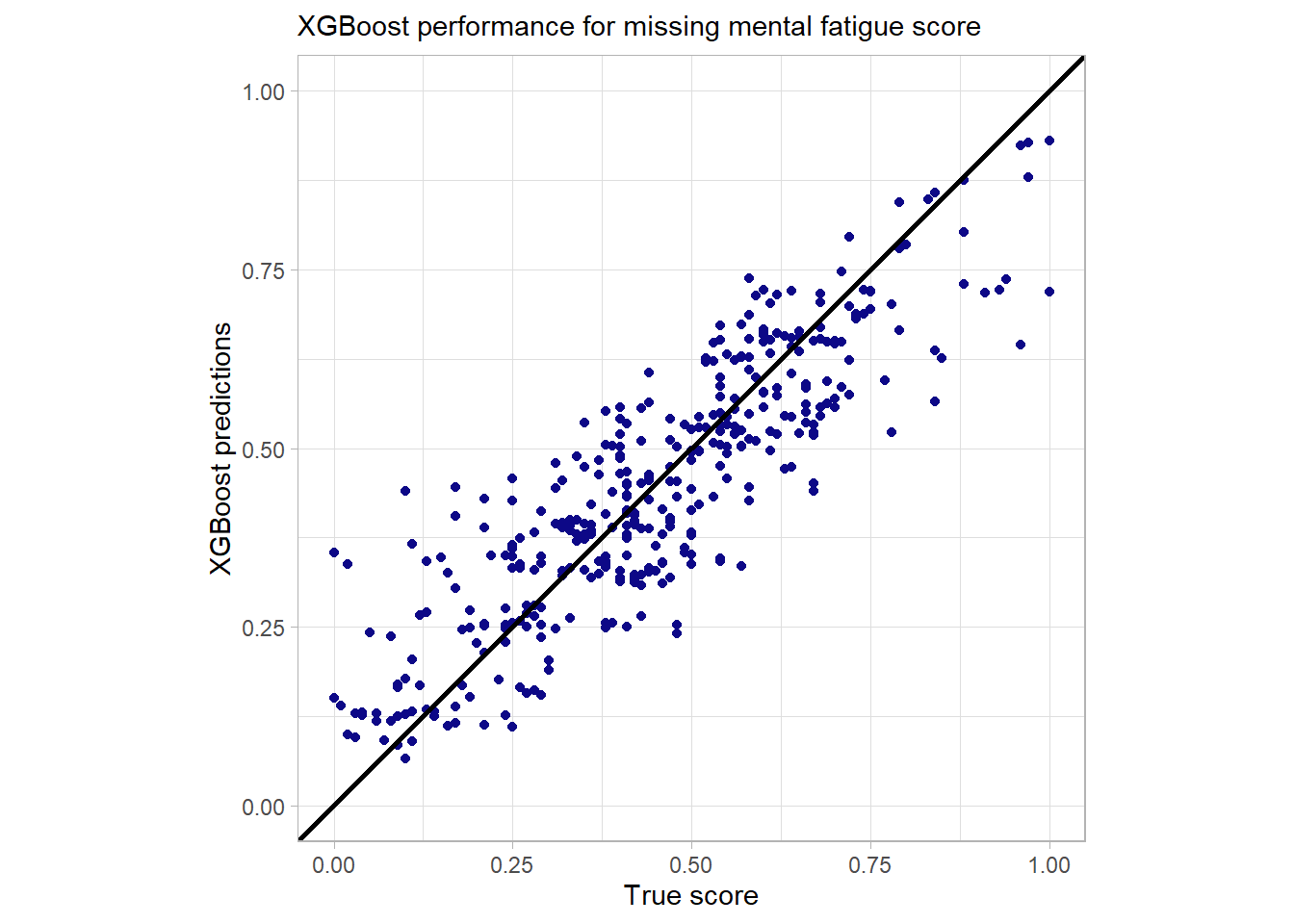

Now have a look at the performance of the main boosting model w.r.t. missing values in the most influential variable.

miss_vals_burn_no <- burnout_test %>%

mutate(boost_pred = predict(final_bout_boost_wflow,

new_data = .)[[".pred"]]) %>%

filter(!is.na(mental_fatigue_score)) %>%

ggplot(aes(x = burn_rate, y = boost_pred)) +

geom_point(col = plasma(1), alpha = 0.1, na.rm = TRUE) +

geom_abline(slope = 1, intercept = 0, size = 1) +

xlim(0,1) + ylim(0,1) +

theme_light() +

labs(y = "XGBoost predictions",

x = "True score",

title = "XGBoost performance for non missing mental fatigue score") +

coord_equal() +

theme(plot.title = ggtext::element_markdown(size = 11))

miss_vals_burn_no

miss_vals_burn_yes <- burnout_test %>%

mutate(boost_pred = predict(final_bout_boost_wflow,

new_data = .)[[".pred"]]) %>%

filter(is.na(mental_fatigue_score)) %>%

ggplot(aes(x = burn_rate, y = boost_pred)) +

geom_point(col = plasma(1)) +

geom_abline(slope = 1, intercept = 0, size = 1) +

xlim(0,1) + ylim(0,1) +

theme_light() +

labs(y = "XGBoost predictions",

x = "True score",

title = "XGBoost performance for missing mental fatigue score") +

coord_equal() +

theme(plot.title = ggtext::element_markdown(size = 11))

miss_vals_burn_yes

The performance for the missing values is indeed not that precise but still quite good. There is at least no huge outlier detectable here. While at first glance the fact that outliers are handled naturally by the model is not astonishing it really is one the core strengths of the model to deal with messy data that includes missing and sparse data. So there is no need for imputation or a second fallback model for missing values.

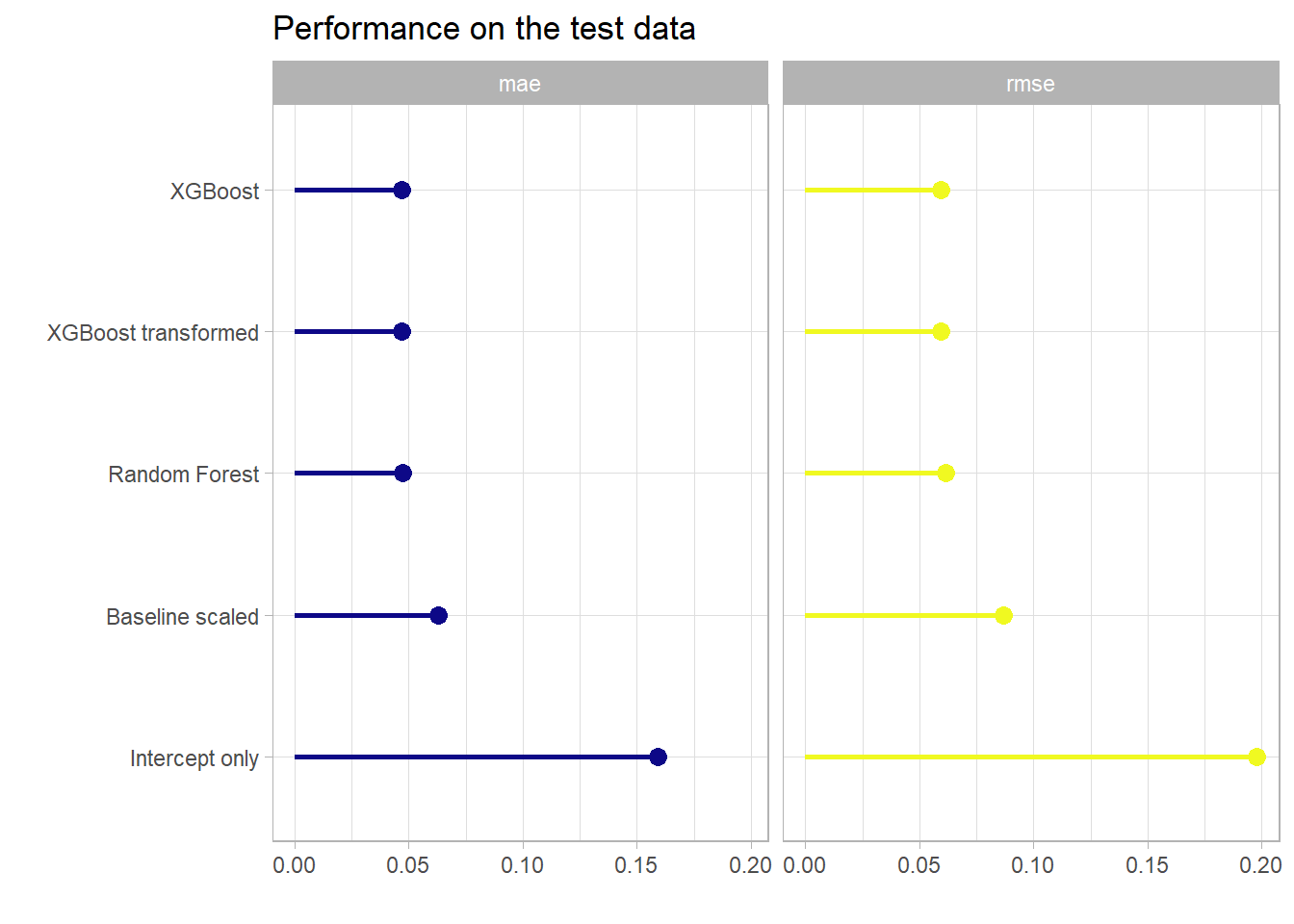

Now it is interesting to check which model performed the best on the test data set. The results can be viewed below in tabular and graphical form.

# calculate the mae and the rmse for all models (random forest and xgboost)

bout_test_perf <- burnout_test %>%

mutate(rf_pred = predict(final_bout_rf_wflow,

new_data = .)[[".pred"]],

boost_pred = predict(final_bout_boost_wflow,

new_data = .)[[".pred"]],

boost_trans_pred = predict(final_bout_boost_wflow_trans,

new_data = .)[[".pred"]],

# transform the predictions back

boost_trans_pred = (2 / (exp(-boost_trans_pred) + 1)) - 0.5,

intercept_pred = bout_predict_trivial_mean(.),

mfs_scored_pred = bout_predict_trivial_mfs(.)) %>%

mutate(across(ends_with("pred"), function(col) {

case_when(

col < 0 ~ 0,

col > 1 ~ 1,

TRUE ~ col

)

})) %>%

select(burn_rate, rf_pred, boost_pred, boost_trans_pred,

intercept_pred, mfs_scored_pred) %>%

pivot_longer(-burn_rate, names_to = "model") %>%

group_by(model) %>%

summarise(

mae = mae_vec(

truth = burn_rate,

estimate = value

),

rmse = rmse_vec(

truth = burn_rate,

estimate = value

)

) %>%

arrange(rmse)

knitr::kable(bout_test_perf, digits = 4,

booktabs = TRUE,

caption = "Performance on the test data")| model | mae | rmse |

|---|---|---|

| boost_trans_pred | 0.0467 | 0.0592 |

| boost_pred | 0.0467 | 0.0593 |

| rf_pred | 0.0474 | 0.0612 |

| mfs_scored_pred | 0.0628 | 0.0870 |

| intercept_pred | 0.1593 | 0.1980 |

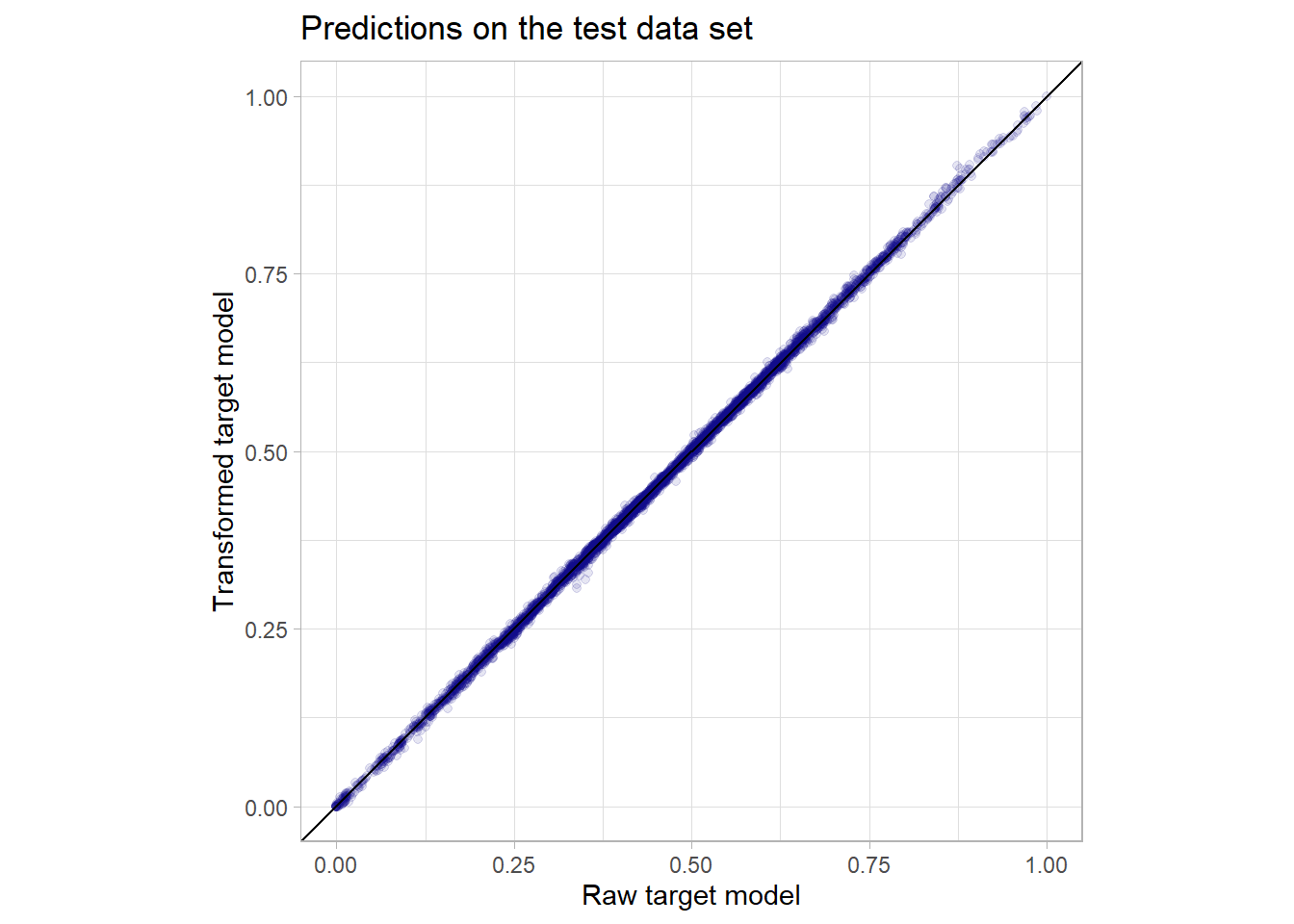

It can be observed that already the really simple baseline model that just scales the mental fatigue score (so it reflects just a single linear influence) has a really low MAE and RMSE. Still both random forest as well as the boosting model can further improve this metric but the huge linear influence of the mental fatigue score obviously leaves not much room for improvement. By the way the simple linear model with just this one predictor has already an \(R^2\) of more than 0.92. XGBoost manages to get a slightly better performance on the test data set but this would only be nice in a machine learning competition as in real life this difference would negligible. Actually in this use case for a real life application a very easy explainable linear model might be somewhat better than a complex model like XGBoost as the difference in performance is not too big. Nevertheless in my opinion the predictor mental_fatigue_score should be treated with extreme care as in a real life situation the collection of this score could be as costly or hard as the one of the outcome. There might be even latent variables that are highly linearly correlated to both scores. But this data was not intended to be used in a real life application but was shared at a machine learning competition and actually many of the best submissions there used XGBoost models. The transformation of the outcome variable did actually not change the predictive power of the model as the result for both the model with the raw target as well as the one with the transformed one performed equally good. Below one can see a scatterplot comparing the individual predictions of these two models on the test set which perfectly underlines the hypothesis that the models are basically the same as there is almost no variation around the identity slope.

So all in all for this data set it was not a way better predictive performance (if one is not in an artificial machine learning setup) that was the core strength of the tree-based gradient boosting model but the minimal pre-processing and exploratory work (for example no interactions have to be detected manually or be tested for significance) that was needed and the natural handling of missing values to achieve the model. This of course came to a quite high computational price. The famous predictive performance could probably be displayed better when having a data set with more complex non-linear patterns.

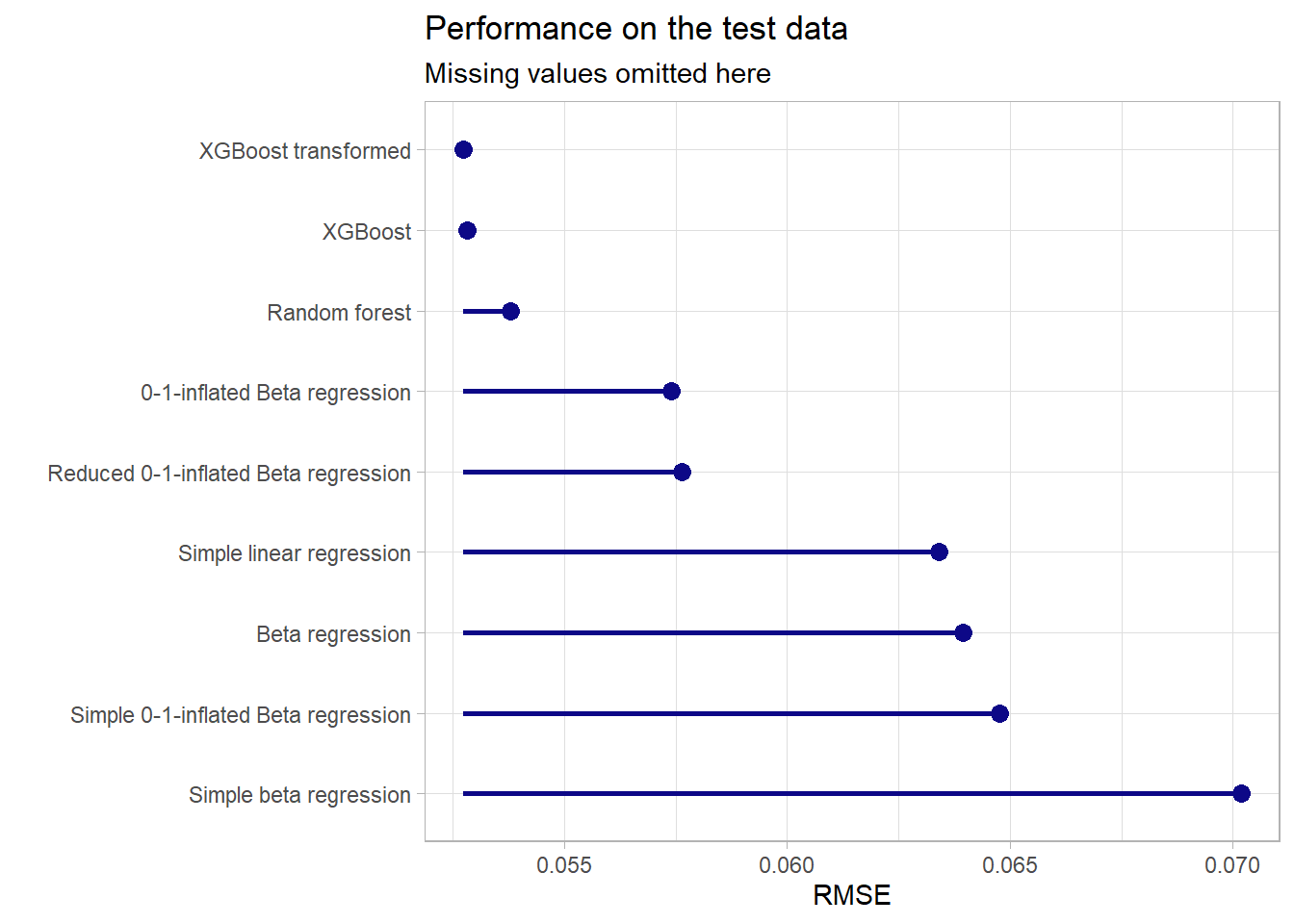

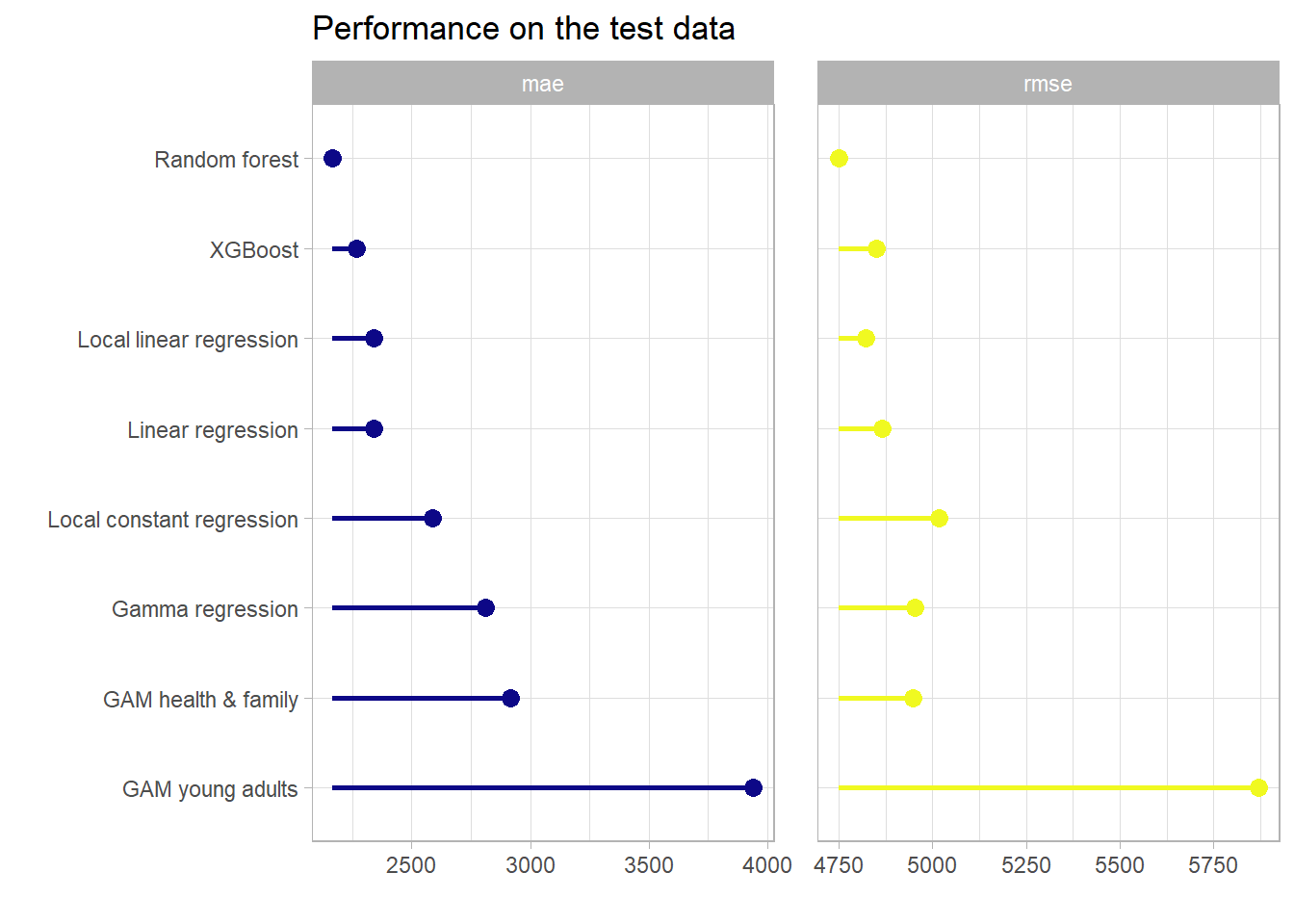

Below one can find a comparison between the models presented here and the models that other participants of the seminar proposed. The respective model formulas and model types will not be discussed in detail at this point.

######################################################

# Visualize the results of the other seminar

# participants in order to compare the predictive results

# Burnout data set

######################################################

# omit missing values here in order to have the exact same test data set

bout_test_perf_on_nonmissing <- burnout_test %>%

drop_na() %>%

mutate(rf_pred = predict(final_bout_rf_wflow,

new_data = .)[[".pred"]],

boost_pred = predict(final_bout_boost_wflow,

new_data = .)[[".pred"]],

boost_trans_pred = predict(final_bout_boost_wflow_trans,

new_data = .)[[".pred"]],

# transform the predictions back

boost_trans_pred = (2 / (exp(-boost_trans_pred) + 1)) - 0.5,

intercept_pred = bout_predict_trivial_mean(.),

mfs_scored_pred = bout_predict_trivial_mfs(.)) %>%

mutate(across(ends_with("pred"), function(col) {

case_when(

col < 0 ~ 0,

col > 1 ~ 1,

TRUE ~ col

)

})) %>%

select(burn_rate, rf_pred, boost_pred, boost_trans_pred,

intercept_pred, mfs_scored_pred) %>%

pivot_longer(-burn_rate, names_to = "model") %>%

group_by(model) %>%

summarise(

mae = mae_vec(

truth = burn_rate,

estimate = value

),

rmse = rmse_vec(

truth = burn_rate,

estimate = value

)

) %>%

arrange(rmse)

bout_test_perf_on_nonmissing %>%

select(-mae) %>%

# here the calculated rmse can be just appended as the same train

# test split was used, the models below were proposed by Ferdinand

# Buchner

bind_rows(

tribble(~model, ~rmse,

"Simple beta regression", 0.07017522,

"Beta regression", 0.06394935,

"Simple 0-1-inflated Beta regression",0.06475547,

"0-1-inflated Beta regression", 0.05739319,

"Reduced 0-1-inflated Beta regression", 0.05763967)

) %>%

mutate(model = as.factor(model),

model = fct_recode(model,

"XGBoost" = "boost_pred",

"XGBoost transformed" = "boost_trans_pred",

"Random forest" = "rf_pred",

"Simple linear regression" =

"mfs_scored_pred",

"Intercept only" = "intercept_pred"),

model = fct_reorder(model, -rmse)) %>%

filter(model != "Intercept only") %>%

arrange(rmse) %>%

ggplot(aes(x = rmse, y = model)) +

geom_point(shape = 16, size = 3, col = plasma(1)) +

geom_segment(aes(y = model, yend = model,

x = min(rmse), xend = rmse),

size = 1, col = plasma(1)) +

scale_color_viridis_d(option = "C") +

labs(x = "RMSE",y = "", title = "Performance on the test data",

subtitle = "Missing values omitted here") +

theme_light() +

theme(legend.position = "None")

This finishes the analysis of the burnout data set. But no worries there is still one data set and boosting model left to explore namely the insurance data set.

5.2 Insurance data

5.2.1 Baseline models

The first thing is to set up the trivial baseline models.

# the trivial intercept only model:

ins_predict_trivial_mean <- function(new_data) {

rep(mean(ins_train$log10_charges), nrow(new_data))

}

# the trivial linear model without any interactions (as we do not have

# missing values does not have to be dealt with them)

ins_baseline_lm <- lm(log10_charges ~ age + sex + bmi +

children + smoker + region,

data = ins_train %>%

mutate(across(where(is.character), as.factor)))

summary(ins_baseline_lm)##

## Call:

## lm(formula = log10_charges ~ age + sex + bmi + children + smoker +

## region, data = ins_train %>% mutate(across(where(is.character),

## as.factor)))

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.46395 -0.08466 -0.01955 0.02643 0.93557

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.0685262 0.0357167 85.913 < 2e-16 ***

## age 0.0149190 0.0004316 34.566 < 2e-16 ***

## sexmale -0.0251970 0.0119527 -2.108 0.035260 *

## bmi 0.0052551 0.0010243 5.130 3.44e-07 ***

## children 0.0413979 0.0050235 8.241 5.00e-16 ***

## smokeryes 0.6841462 0.0147963 46.238 < 2e-16 ***

## regionnorthwest -0.0178125 0.0172137 -1.035 0.301003

## regionsoutheast -0.0613744 0.0172229 -3.564 0.000382 ***

## regionsouthwest -0.0512729 0.0172868 -2.966 0.003084 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.1945 on 1061 degrees of freedom

## Multiple R-squared: 0.764, Adjusted R-squared: 0.7622

## F-statistic: 429.4 on 8 and 1061 DF, p-value: < 2.2e-16The predictions of these baseline models on the test data set will be compared with the predictions of the tree-based models that will be constructed.

5.2.2 Model specification

# Models:

# Random forest model for comparison

ins_rf_model <- rand_forest(trees = tune(),

mtry = tune()) %>%

set_engine("ranger") %>%

set_mode("regression")

# XGBoost model

ins_boost_model <- boost_tree(trees = tune(),

learn_rate = tune(),

loss_reduction = tune(),

tree_depth = tune(),

mtry = tune(),

sample_size = tune(),

stop_iter = 10) %>%

set_engine("xgboost") %>%

set_mode("regression")# Workflows: model + recipe

ins_rf_wflow <-

workflow() %>%

add_model(ins_rf_model) %>%

add_recipe(ins_rec_rf)

ins_boost_wflow <-

workflow() %>%

add_model(ins_boost_model) %>%

add_recipe(ins_rec_boost)5.2.3 Tuning

For the hyperparameter tuning one needs validation sets to monitor the models on unseen data. To do this 5-fold cross validation (CV) is used here.

# Create Resampling object

set.seed(2)

ins_folds <- vfold_cv(ins_train, v = 5)Now the parameter objects will be fixed for the grid searches analogously to above.

ins_rf_params <- ins_rf_wflow %>%

parameters() %>%

update(trees = trees(c(100, 2000))) %>%

finalize(ins_train)

ins_rf_params %>% pull_dials_object("trees")## # Trees (quantitative)

## Range: [100, 2000]ins_rf_params %>% pull_dials_object("mtry")## # Randomly Selected Predictors (quantitative)

## Range: [1, 8]ins_boost_params <- ins_boost_wflow %>%

parameters() %>%

update(trees = trees(c(100, 2000))) %>%

finalize(ins_train)

ins_boost_params## Collection of 6 parameters for tuning

##

## identifier type object

## mtry mtry nparam[+]

## trees trees nparam[+]

## tree_depth tree_depth nparam[+]

## learn_rate learn_rate nparam[+]

## loss_reduction loss_reduction nparam[+]

## sample_size sample_size nparam[+]The random forest model goes first with the tuning.

Here there are as seen above only two hyperparameters to be tuned thus 30 combinations should suffice.

# took roughly 2 minutes

system.time({

set.seed(2)

ins_rf_tune <- ins_rf_wflow %>%

tune_grid(

resamples = ins_folds,

grid = ins_rf_params %>%

grid_latin_hypercube(size = 30, original = FALSE),

metrics = regr_metrics

)

})

# visualization of the tuning results (snapshot of the output below)

autoplot(ins_rf_tune) + theme_light()

# this functions shows the best combinations wrt the rmse metric of all the

# combinations in the grid

show_best(ins_rf_tune, metric = "rmse")

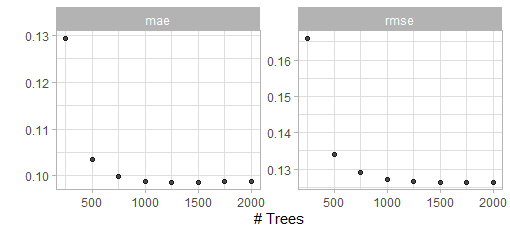

Figure 5.5: Result of a spacefilling grid search for the random forest model.

The visualization alongside the best performing results suggest that a value of mtryof 4 and 1000 trees should give good results. Thus one can finalize and fit this model.

final_ins_rf_wflow <-

ins_rf_wflow %>%

finalize_workflow(tibble(

trees = 1000,

mtry = 4

)) %>%

fit(ins_train)Now the boosting model.

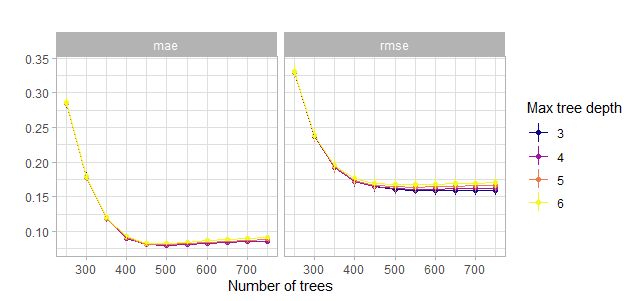

Again one starts to tune over the number of trees first (this time we also use three different tree depths to visualize the impact of this parameter too)

first_grid_boost_ins <- crossing(

trees = seq(250, 2000, 250),

mtry = 8,

tree_depth = c(1, 4, 6),

loss_reduction = 0.000001,

learn_rate = 0.01,

sample_size = 1

)# took roughly half a minute

system.time({

set.seed(2)

ins_boost_tune_first <- ins_boost_wflow %>%

tune_grid(

resamples = ins_folds,

grid = first_grid_boost_ins,

metrics = regr_metrics

)

})

show_best(ins_boost_tune_first)# visualize the tuning results (output in the figure below)

ins_boost_tune_first %>%

collect_metrics() %>%

ggplot(aes(x = trees, y = mean, col = factor(tree_depth))) +

geom_point() +

geom_line() +

geom_linerange(aes(ymin = mean - std_err, ymax = mean + std_err)) +

labs(col = "Max tree depth", y = "", x = "Number of trees",

title = "") +

scale_color_viridis_d(option = "C") +

facet_wrap(~ .metric) +

theme_light()

Figure 5.6: Comparison of the initial grid search for the number of trees w.r.t. the maximum tree depth. Actually with tiny confidence bands around the estimates.

This visualization clearly shows that one has to have a closer look at the region around 500 trees. More than 500 trees might lead to overfitting as seen here.

second_grid_boost_ins <- crossing(

trees = seq(250, 750, 50),

mtry = 8,

tree_depth = c(3, 4, 5, 6),

loss_reduction = 0.000001,

learn_rate = 0.01,

sample_size = 1

)# took roughly 15 seconds

system.time({

set.seed(2)

ins_boost_tune_second <- ins_boost_wflow %>%

tune_grid(

resamples = ins_folds,

grid = second_grid_boost_ins,

metrics = regr_metrics

)

})

show_best(ins_boost_tune_second)# visualize the tuning results (output in the figure below)

ins_boost_tune_second %>%

collect_metrics() %>%

ggplot(aes(x = trees, y = mean, col = factor(tree_depth))) +

geom_point() +

geom_line() +

geom_linerange(aes(ymin = mean - std_err, ymax = mean + std_err)) +

labs(col = "Max tree depth", y = "", x = "Number of trees",

title = "") +

scale_color_viridis_d(option = "C") +

facet_wrap(~ .metric) +

theme_light()

Figure 5.7: Comparison of the second grid search for the number of trees w.r.t. the maximum tree depth. Actually with tiny confidence bands around the estimates.

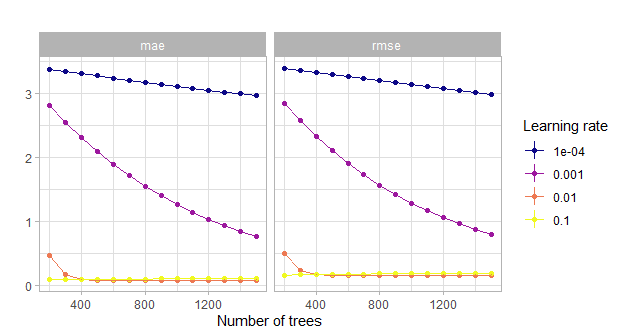

So from this visualization one can conclude that a number of trees of 600 should suffice to get a decent model. So the workflow will be updated and the number of trees fixed. After that the first major space filling grid search will be performed. Moreover with the previous visualizations in mind one can reduce the bound of the parameter space of the maximum tree depth quite a bit from 15 to 9. A minimum tree depth of 2 seems also appropriate. Before one does that mainly for a view on the influence of the learning rate one can tune one round only with the learning rate and some tree numbers for comparison.

# tune mainly learn rate

lrate_grid_boost_ins <- crossing(

trees = seq(200, 1500, 100),

mtry = 8,

tree_depth = c(3),

loss_reduction = c(0.000001),

learn_rate = c(0.1, 0.01, 0.001, 0.0001),

sample_size = 1

)# took roughly 20 seconds

system.time({

set.seed(2)

ins_boost_tune_lrate <- ins_boost_wflow %>%

tune_grid(

resamples = ins_folds,

grid = lrate_grid_boost_ins,

metrics = regr_metrics

)

})

show_best(ins_boost_tune_lrate)# visualize the tuning results (output in the figure below)

ins_boost_tune_lrate %>%

collect_metrics() %>%

ggplot(aes(x = trees, y = mean, col = factor(learn_rate))) +

geom_point() +

geom_line() +

geom_linerange(aes(ymin = mean - std_err, ymax = mean + std_err)) +

labs(x = "Number of trees", y = "", col = "Learning rate",

title = "") +

scale_color_viridis_d(option = "C") +

facet_wrap(~ .metric) +

theme_light()

Figure 5.8: Comparison of the grid search for the number of trees w.r.t. the learning rate. Actually with tiny confidence bands around the estimates.

This showcases the fact that indeed a learning rate that is too small can blow up the computational costs.

Now fix the number of the trees hyperparameter.

# fix the number of trees by redefining the boostin model with a

# fixed number of trees.

ins_boost_model <- boost_tree(trees = 600,

learn_rate = tune(),

loss_reduction = tune(),

tree_depth = tune(),

mtry = tune(),

sample_size = tune(),

stop_iter = 10) %>%

set_engine("xgboost") %>%

set_mode("regression")

# update the workflow

ins_boost_wflow <-

ins_boost_wflow %>%

update_model(ins_boost_model)

ins_boost_params <- ins_boost_wflow %>%

parameters() %>%

update(tree_depth = tree_depth(c(2, 9))) %>%

finalize(ins_train)

# reduced hyperparameter space

ins_boost_params## Collection of 5 parameters for tuning

##

## identifier type object

## mtry mtry nparam[+]

## tree_depth tree_depth nparam[+]

## learn_rate learn_rate nparam[+]

## loss_reduction loss_reduction nparam[+]

## sample_size sample_size nparam[+]# now tune all the remaining hyperparameters with a large space filling grid

# took roughly 10 minutes

system.time({

set.seed(2)

ins_boost_tune_major1 <- ins_boost_wflow %>%

tune_grid(

resamples = ins_folds,

grid = ins_boost_params %>%

grid_latin_hypercube(

size = 300),

metrics = regr_metrics

)

})

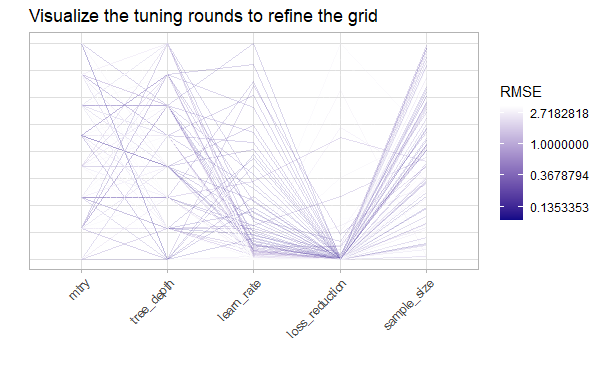

show_best(ins_boost_tune_major1, metric = "rmse")

Figure 5.9: Visualize the tuning results with a parallel coordinate plot. The y axis represents the scaled range for each of the hyperparameter spaces.

The most prominent suggestions of the visualizations and the table of the best performing ones are combinations of hyperparameters with a very low loss_reduction , a low learn_rate, a medium to high value of mtry, a not too big tree_depth as well as a rather high value for the sample_size.

These observations will be used to refine the search space and perform once more a space filling grid search.

# now tune all the remaining hyperparameters with a refined space filling grid

# took roughly 15 minutes

system.time({

set.seed(2)

ins_boost_tune_major2 <- ins_boost_wflow %>%

tune_grid(

resamples = ins_folds,

grid = ins_boost_params %>%

update(

mtry = mtry(c(6,8)),

tree_depth = tree_depth(c(3,4)),

learn_rate = learn_rate(c(-1.7, -1.5)),

loss_reduction = loss_reduction(c(-4, -1.5)),

sample_size = sample_prop(c(0.3, 0.9))

) %>%

grid_latin_hypercube(

size = 300),

metrics = regr_metrics

)

})

show_best(ins_boost_tune_major2, metric = "rmse")The results from this grid search were quite clear. One will further use a mtry value of 7, a tree_depth of 3, a learn_rate of 0.02, a loss_reduction of 0.03 and a sample_size of 0.8.

final_ins_boost_wflow <-

ins_boost_wflow %>%

finalize_workflow(tibble(

mtry = 7,

tree_depth = 3,

learn_rate = 0.02,

loss_reduction = 0.03,

sample_size = 0.8

)) %>%

fit(ins_train)From here no computational intensive task will be performed so one stops the cluster.

# stop cluster

stopCluster(cl)5.2.4 Evaluate and understand the model

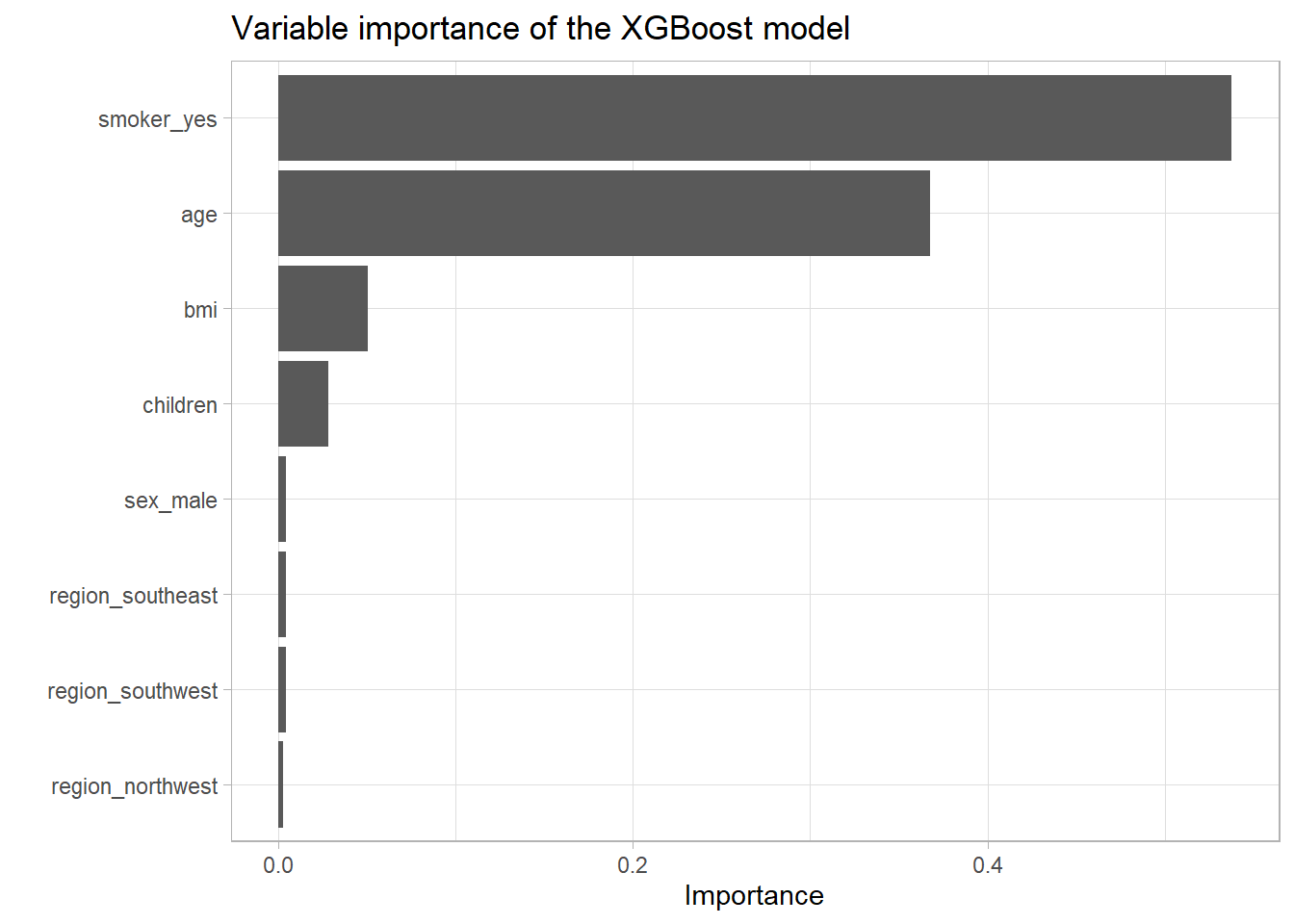

First a visualization of the variable importance. The variable importance is again calculated by measuring the gain w.r.t. the loss of each feature in the single trees and splits.

vip_ins <- final_ins_boost_wflow %>%

pull_workflow_fit() %>%

vip::vip() +

theme_light() +

labs(title = "Variable importance of the XGBoost model")

vip_ins

ggsave("_pictures/vip_ins.png", plot = vip_ins)

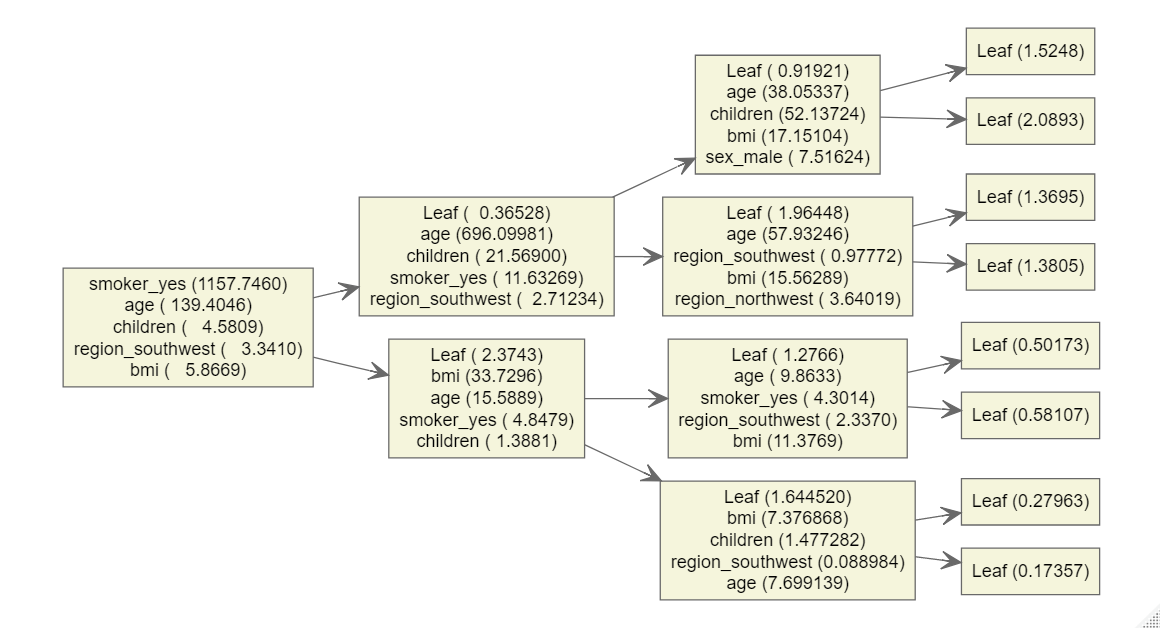

remove(vip_ins)Here one can clearly see that the trends that were observable during the EDA are reflected here again. The most influential variable is indeed the smoker variable, closely followed by the age variable. Also the bmi and the number of children seem to be relevant. To get an even better sense for where which variable was mostly used one can have a look at the visualization below which shows the final model in a compressed form as a single tree. At each node the most important (again by the feature importance score like above) 5 variables are listed with their respective raw importance scores in brackets. The ‘Leaf’ variable just corresponds to the case where trees ended at this point.

# Visualization of the ensemble of trees as a single collective unit

final_ins_boost_fit <- final_ins_boost_wflow %>%

pull_workflow_fit()

final_ins_boost_fit$fit %>%

xgb.plot.multi.trees(render = T)

# output as figure below as this returns a htmlwidget which is not that

# intuitively handled by latex (pdf output)

Figure 5.10: Visualization of the final ensemble of trees as a single collective unit.

It is quite interesting to see that most of the time the smoker feature was used just once in the first node but not multiple times. This suggests a quite linear influence of smokers and of course the binary character of the feature can support this behavior. Age on the other hand is represented in multiple depths with high importance which suggests a non-linear influence which was also visible during the EDA. Interestingly the children variable for example mostly is used at depth 3 for a split.

Also a residual plot of the XGBoost predictions on the test data set can shed some light on the way the model works. This 3D plot is an interactive html widget which works not in the pdf output but just on the website. Besides the residuals on the test set also the residuals on the training data set are shown below

# prepare the data for plotting i.e. calculate raw residuals on the test

# and the training data

resid_plot_ins_data_test <- ins_test %>%

bind_cols(pred = predict(final_ins_boost_wflow, new_data = ins_test)[[".pred"]]) %>%

mutate(resid = charges - 10^pred)

resid_plot_ins_data_train <- ins_train %>%

bind_cols(pred = predict(final_ins_boost_wflow, new_data = ins_train)[[".pred"]]) %>%

mutate(resid = charges - 10^pred)

save(resid_plot_ins_data_test,

resid_plot_ins_data_train,

file = "_data/resid_data_ins.RData")

# test residuals

resid_plot_ins_data_test %>%

plotly::plot_ly(x = ~age, z = ~resid, y = ~bmi,

color = ~factor(smoker),

symbol = ~factor(smoker),

text = ~paste("Age:", age, "BMI:", bmi),

hoverinfo = "text",

symbols = c('x','circle'),

colors = plasma(3)[2:1]) %>%

plotly::add_markers(opacity = 0.9,

size = 2) %>%

plotly::layout(scene = list(

xaxis = list(title = "Age"),

yaxis = list(title = "BMI"),

zaxis = list(title = "Raw residuals")),

title = "Raw test residuals of the insurance XGBoost model",

legend = list(title = list(text = "Smoker")))# train residuals

resid_plot_ins_data_train %>%

plotly::plot_ly(x = ~age, z = ~resid, y = ~bmi,

color = ~factor(smoker),

symbol = ~factor(smoker),

text = ~paste("Age:", age, "BMI:", bmi),

hoverinfo = "text",

symbols = c('x','circle'),

colors = plasma(3)[2:1]) %>%

plotly::add_markers(opacity = 0.9,

size = 2) %>%

plotly::layout(scene = list(

xaxis = list(title = "Age"),

yaxis = list(title = "BMI"),

zaxis = list(title = "Raw residuals")),

title = "Raw train residuals of the insurance XGBoost model",

legend = list(title = list(text = "Smoker")))For the train residuals no clear pattern in the residuals is visible. Looking at the test residuals one can see where the model has the most difficulties. Interesting enough the model has the biggest outliers with non smokers of either quite low or quite high age with a more or less not that extreme BMI. This could be due to chronic diseases especially for the younger ones but also some randomness that life holds. For example a car accident could cause such high charges. Of course it could be also due to not observed latent variables.

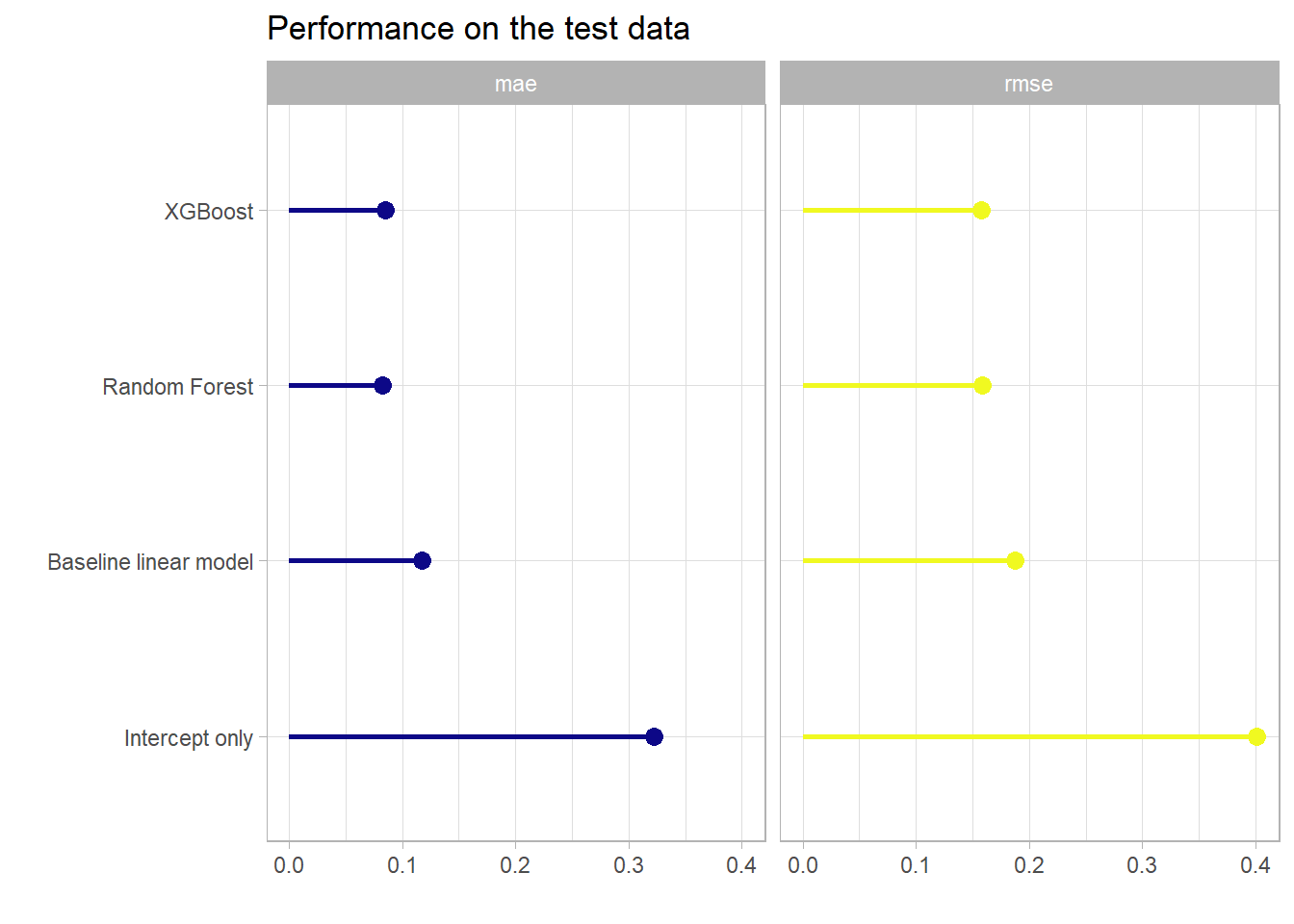

Which model performed the best on the test data set is the next interesting question. The results can be viewed below in tabular and graphical form.

| model | mae | rmse |

|---|---|---|

| boost_pred | 0.0849 | 0.1581 |

| rf_pred | 0.0820 | 0.1586 |

| baseline_lm | 0.1171 | 0.1877 |

| intercept_pred | 0.3227 | 0.4012 |

Once without the intercept only model.

So overall the XGBoost model again delivered the best performance on the test data set w.r.t. the main optimization metric RMSE. The baseline linear model that takes all features without interactions into account also goes a long way and the performance w.r.t. the RMSE metric is even comparable to the tree-based models. So again the data did not leave much room to explore more complex non-linear patterns. Whether the rather small difference in performance is worth considering such a model is of course up to the use case. Again the XGBoost model displayed that it reaches a very competitive performance with minimal pre-processing, integrated feature selection and in this case the computational effort was quite small. The major pros and cons of the tree-based gradient boosting models will be shortly reviewed in the next and final section.

Before concluding one can have a look at the predictive results other seminar participants had with their models like GAMs or GLMs. Nevertheless the model formulas and model types will not be discussed in detail at this point.

######################################################

# Visualize the results of the other seminar

# participants in order to compare the predictive results

# Insurance data set

######################################################

# best linear model and gamma regression model as proposed by

# Maximilian Fleissner

best_linear_model_ins <- lm(log(charges) ~ sex + obese +

smoker + region + children +

poly(age - 40, 2, raw = T) +

poly(bmi - 30, 2, raw = T) +

smoker:obese +

smoker:region +

smoker:children +

smoker:poly(age - 40, 2, raw = T),

data = ins_train %>%

mutate(obese = if_else(bmi > 30, "Y", "N"),

across(where(is.character),

as.factor)))

best_gamma_model_ins <- glm(charges ~ (sex + obese + smoker +

region + children +

poly(bmi - 30, 2, raw = T) +

age +

smoker:obese +

smoker:region +

smoker:age +

smoker:children),

data = ins_train %>%

mutate(obese = if_else(bmi > 30, "Y", "N"),

across(where(is.character),

as.factor)),

family = Gamma(link = "log"))

# best GAM models as proposed by Elizabeth Griesbauer

## health and family ##

gam_ins_health <- mgcv::gam(charges ~ smoker + age:children +

age:smoker +

te(bmi, by = smoker, k = 10),

family = Gamma(link ="log"),

method = "GCV.Cp", select=T,

data = ins_train %>%

mutate(children = as.factor(children),

older_than_30 =

if_else(age >= 30,

"Y", "N"),

across(where(is.character),

as.factor)))

## young adults ##

gam_ins_young_adults <- mgcv::gam(charges ~ smoker*older_than_30 +

te(bmi, by = smoker, k = 20),

family = Gamma(link = "log"),

method = "GCV.Cp", select = T,

data = ins_train %>%

mutate(children = as.factor(children),

older_than_30 =

if_else(age >= 30,

"Y", "N"),

across(where(is.character),

as.factor)))

# best Local constant regression and Local linear regression as

# proposed by Tamara Simjanoska

library(np)

bw_lc_age_smoker_bmi <- np::npregbw(log(charges) ~ age + smoker + bmi,

bwmethod = "cv.ls",

bwtype = "fixed",

regtype = "lc",

data = ins_train %>%

mutate(across(where(is.character),

as.factor)))##

Multistart 1 of 3 |

Multistart 1 of 3 |

Multistart 1 of 3 |

Multistart 1 of 3 /

Multistart 1 of 3 -

Multistart 1 of 3 \

Multistart 1 of 3 |

Multistart 1 of 3 |

Multistart 2 of 3 |

Multistart 2 of 3 |

Multistart 2 of 3 /

Multistart 2 of 3 -

Multistart 2 of 3 \

Multistart 2 of 3 |

Multistart 2 of 3 |

Multistart 3 of 3 |

Multistart 3 of 3 |

Multistart 3 of 3 /

Multistart 3 of 3 -

Multistart 3 of 3 |

Multistart 3 of 3 |

local_const_regr_ins <- np::npreg(bws = bw_lc_age_smoker_bmi)

bw_ll_age_smoker_bmi_children <- np::npregbw(

log(charges) ~ age + smoker + bmi + children,

bwmethod = "cv.ls", bwtype = "fixed",

regtype = "ll",

data = ins_train %>%

mutate(children = if_else(children %in% c(4,5), 3, children),

children = factor(children,

ordered = TRUE,

levels = c("0", "1", "2", "3"))) %>%

mutate(across(where(is.character), as.factor)))##

Multistart 1 of 4 |

Multistart 1 of 4 |

Multistart 1 of 4 |

Multistart 1 of 4 /

Multistart 1 of 4 -

Multistart 1 of 4 |

Multistart 1 of 4 |

Multistart 2 of 4 |

Multistart 2 of 4 |

Multistart 2 of 4 /

Multistart 2 of 4 -

Multistart 2 of 4 |

Multistart 2 of 4 |

Multistart 3 of 4 |

Multistart 3 of 4 |

Multistart 3 of 4 /

Multistart 3 of 4 -

Multistart 3 of 4 |

Multistart 3 of 4 |

Multistart 4 of 4 |

Multistart 4 of 4 |

Multistart 4 of 4 /

Multistart 4 of 4 -

Multistart 4 of 4 \

Multistart 4 of 4 |

Multistart 4 of 4 |

linear_linear_regr_ins <- np::npreg(bws = bw_ll_age_smoker_bmi_children)

ins_test_perf_overall <- ins_test %>%

mutate(boost_pred = predict(final_ins_boost_wflow,

new_data = .)[[".pred"]],

rf_pred = predict(final_ins_rf_wflow,

new_data = .)[[".pred"]]

# intercept_pred = ins_predict_trivial_mean(.)

) %>%

mutate(across(ends_with("_pred"), function(x) 10^x)) %>%

mutate(lm_pred = predict(best_linear_model_ins,

ins_test %>%

mutate(obese = if_else(bmi > 30, "Y", "N"),

across(where(is.character),

as.factor))),

lm_pred = exp(lm_pred),

gamma_pred = predict(best_gamma_model_ins,

ins_test %>%

mutate(obese = if_else(bmi > 30, "Y", "N"),

across(where(is.character),

as.factor)),

type = "response"),

gam_health_pred = mgcv::predict.gam(

gam_ins_health,

ins_test %>%

mutate(children = as.factor(children),

older_than_30 = if_else(age >= 30,

"Y", "N"),

across(where(is.character),

as.factor)),

type = "response"

),

gam_young_adults_pred = mgcv::predict.gam(

gam_ins_young_adults,

ins_test %>%

mutate(children = as.factor(children),

older_than_30 = if_else(age >= 30,

"Y", "N"),

across(where(is.character),

as.factor)),

type = "response"

),

local_const_pred = predict(

local_const_regr_ins,

newdata = ins_test %>%

mutate(across(where(is.character), as.factor))

),

local_const_pred = exp(local_const_pred),

local_linear_pred = predict(

linear_linear_regr_ins,

newdata = ins_test %>%

mutate(children = if_else(children %in% c(4,5),

3, children),

children = factor(children,

ordered = TRUE,

levels =

c("0", "1", "2", "3"))) %>%

mutate(across(where(is.character), as.factor))

),

local_linear_pred = exp(local_linear_pred)

)

# rmse and mae on the original charges scale

ins_test_perf_overall %>%

select(charges, ends_with("pred")) %>%

pivot_longer(-charges, names_to = "model", values_to = "pred") %>%

group_by(model) %>%

summarise(

mae = mae_vec(

truth = (charges),

estimate = (pred)

),

rmse = rmse_vec(

truth = (charges),

estimate = (pred)

)

) %>%

arrange(mae) %>%

mutate(model = as.factor(model),

model = fct_recode(model,

"XGBoost" = "boost_pred",

"Random forest" = "rf_pred",

"Linear regression" = "lm_pred",

"Gamma regression" = "gamma_pred",

"Local linear regression" =

"local_linear_pred",

"Local constant regression" =

"local_const_pred",

"GAM health & family" = "gam_health_pred",

"GAM young adults" = "gam_young_adults_pred"),

model = fct_reorder(model, -mae)) %>%

pivot_longer(-model) %>%

group_by(name) %>%

mutate(min_val = min(value)) %>%

ungroup() %>%

ggplot(aes(x = value, y = model, col = name)) +

geom_point(shape = 16, size = 3) +

geom_segment(aes(y = model, yend = model,

x = min_val,

xend = value),

size = 1) +

scale_color_viridis_d(option = "C") +

labs(x = "",y = "", title = "Performance on the test data") +

theme_light() +

theme(legend.position = "None") +

theme(panel.spacing.x = unit(6, "mm")) +

facet_wrap(~name, scales = "free_x")

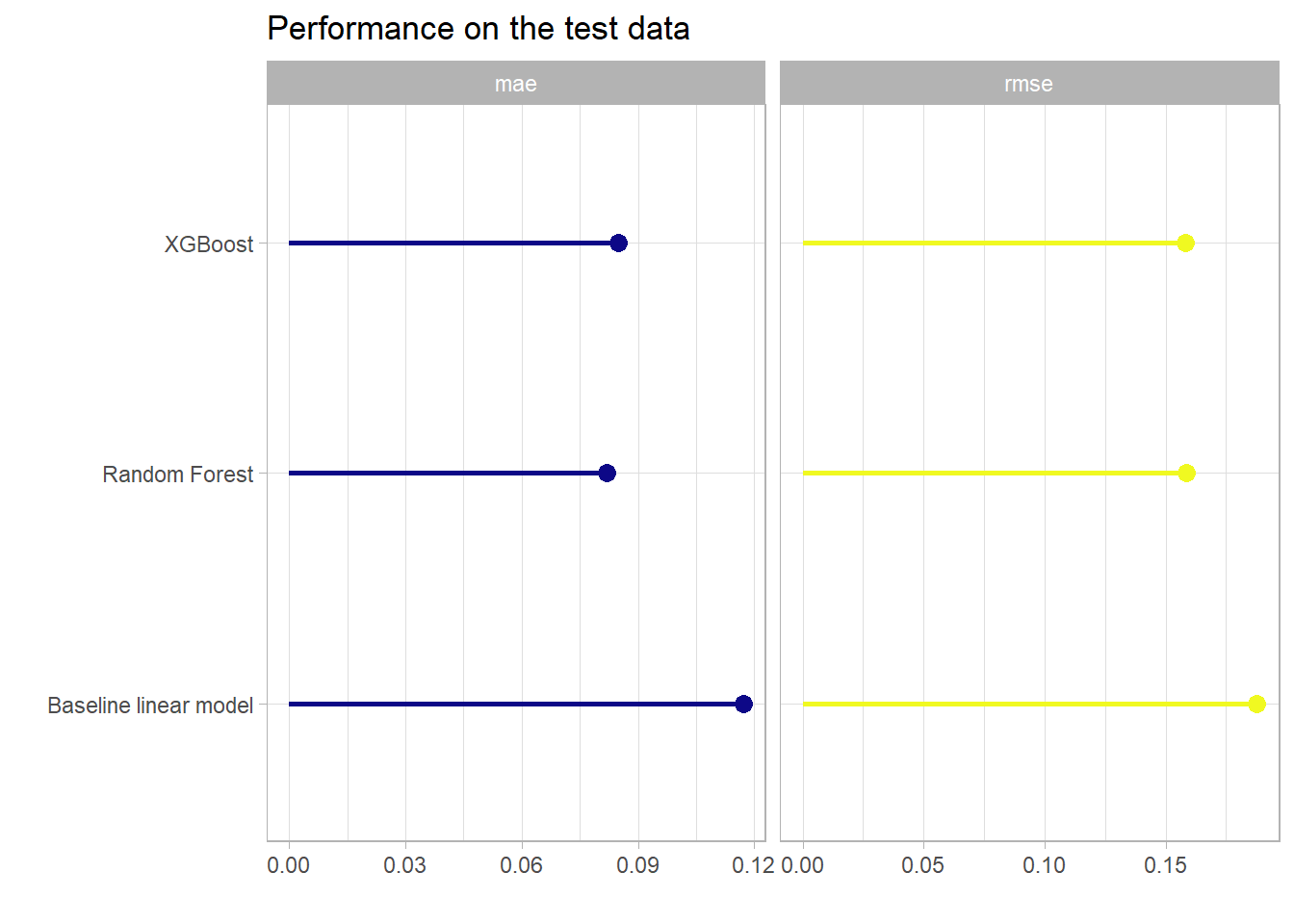

One can see here again that the tree based gradient boosting approach achieves a very competitive predictive performance with respect to the other proposed models on this data set. Besides that the observations from above are applicable here once again. Another notable fact is, that on the original scale w.r.t. the RMSE the Random Forest model can slightly outperform the XGBoost model. This finishes the applied part and opens the door for the final chapter which summarizes the most important takeaways.